Linear regression finds the “best fit” by choosing the parameters a and b that minimize the sum of the squares of the residuals. This method of least squares was first developed by the mathematicians Legendre and Gauss in the early 19th century, who used it to predict the orbits of heavenly bodies from observed data.
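The source names the parameters a and b but does not write out the model. Assuming the straight-line form ŷ = a + b·x (a is the intercept, b the slope), the least-squares estimates have a closed form: b = Sxy / Sxx and a = ȳ - b·x̄. The following is a minimal sketch. The function names and the cost data are hypothetical, chosen only for illustration.

```python
def fit_line(xs, ys):
    """Least-squares estimates of a (intercept) and b (slope) for y ~ a + b*x."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    # Sxy and Sxx: centered cross-products and centered squares of x.
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    b = sxy / sxx
    a = y_bar - b * x_bar
    return a, b


def sum_squared_residuals(xs, ys, a, b):
    """Objective minimized by least squares: sum of (actual - estimated)^2."""
    return sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))


# Hypothetical data: cost (y) against a single size driver (x).
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.1, 4.9, 7.2, 8.8, 11.0]
a, b = fit_line(xs, ys)
print(f"a = {a:.3f}, b = {b:.3f}, SSE = {sum_squared_residuals(xs, ys, a, b):.4f}")
```

Any other choice of a or b gives a larger sum of squared residuals, which is what “best fit” means here.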

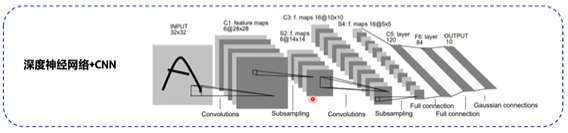

Cost estimating has relied primarily upon regression analysis for parametric estimating. Abraham Maslow famously wrote in his classic book *The Psychology of Science*, “I suppose it is tempting, if the only tool you have is a hammer, to treat everything as if it were a nail.” However, regression analysis is only one of many tools in data science and machine learning. Indeed, traditional linear and nonlinear regression are a small subset of supervised machine learning methods. In this paper, we look at a variety of methods for predictive analysis in cost estimating. These include other supervised methods such as neural networks, deep learning, and regression trees, as well as unsupervised methods and reinforcement learning. We provide the pros and cons of alternatives to regression. We also give a cross-sectional example that illustrates the similarities and differences among a variety of techniques outside the traditional methodology.

A residual is the difference between the actual cost and the estimated cost, which is why it is also referred to as the “error” term. Residuals are an important consideration in modeling, since they often drive the methods used for parameter calculation.
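The remark that residuals drive the parameter calculation can be illustrated with the simplest possible model: a single constant c fitted to a set of actual costs. This is an illustrative sketch with hypothetical data, not an example from the source. Minimizing the sum of squared residuals yields the mean. Minimizing the sum of absolute residuals yields the median. The same data therefore produce different estimates depending on how residuals are penalized.

```python
from statistics import mean, median


def sse(costs, c):
    """Sum of squared residuals for the constant estimate c."""
    return sum((y - c) ** 2 for y in costs)


def sae(costs, c):
    """Sum of absolute residuals for the constant estimate c."""
    return sum(abs(y - c) for y in costs)


def grid_minimizer(loss, costs, lo, hi, steps=10_000):
    """Crude search: return the candidate c in [lo, hi] with the smallest loss."""
    best_c, best_loss = lo, loss(costs, lo)
    for i in range(1, steps + 1):
        c = lo + (hi - lo) * i / steps
        val = loss(costs, c)
        if val < best_loss:
            best_c, best_loss = c, val
    return best_c


# Hypothetical actual costs with one large outlier.
costs = [10.0, 12.0, 11.0, 13.0, 40.0]
print("squared-error estimate:", grid_minimizer(sse, costs, 0, 50), "mean:", mean(costs))
print("absolute-error estimate:", grid_minimizer(sae, costs, 0, 50), "median:", median(costs))
```

The grid search is only a stand-in for a proper optimizer. It confirms numerically that the squared-error fit is pulled toward the outlier while the absolute-error fit is not.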

(Most) machine learning algorithms are essentially optimization problems: you minimize or maximize an objective function subject to certain constraints. Excel comes with the Solver add-in, which is handy for lightweight problems. So it is entirely possible to build a machine learning model within Excel (I've done it myself). If your data set is reasonably small (say, fewer than 10,000 rows and not too many columns), it is in fact quick and easy to build certain ML models in Excel. For example, neural networks and logistic regression are particularly easy to build because of the simplicity of their objective functions. All you need is to learn how to use Excel Solver and the built-in matrix functions for vectorized computations. If you are really inclined to try, you could refer to the following link:

Excel does have limitations. There are numerical stability issues, and it is tricky to write loops (without VBA). It is a pain to write lengthy functions, and there is no concept of structure, so I wouldn't try to build things like Random Forest. That being said, there are still a good number of algorithms you can implement in Excel for fun. Of course, for serious analysis, I would still recommend using proper tools.
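The claim that (most) ML algorithms are optimization problems can be sketched with logistic regression. Training means choosing weights that minimize an objective, here the average log-loss. In a spreadsheet, Solver would adjust the weight cells to minimize a loss cell. The sketch below instead uses plain batch gradient descent. That is a swapped-in technique, not what Solver's GRG Nonlinear engine does internally. The toy data, the learning rate `lr`, and the iteration count `iters` are hypothetical choices for illustration.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def log_loss(w, b, X, y):
    """Average negative log-likelihood: the objective being minimized."""
    eps = 1e-12  # guards against log(0)
    total = 0.0
    for xi, yi in zip(X, y):
        p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
        total -= yi * math.log(p + eps) + (1 - yi) * math.log(1 - p + eps)
    return total / len(X)


def fit_logistic(X, y, lr=0.5, iters=2000):
    """Batch gradient descent on the log-loss (stand-in for a Solver run)."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(iters):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            # Gradient of the log-loss per example is (p - y) * x.
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


# Hypothetical toy data: one feature, label 1 tends to go with larger values.
X = [[0.5], [1.0], [1.5], [2.0], [3.0], [3.5], [4.0], [4.5]]
y = [0, 0, 0, 1, 0, 1, 1, 1]
w, b = fit_logistic(X, y)
print("weights:", w, "bias:", b, "loss:", log_loss(w, b, X, y))
```

The inner sums over `zip(w, xi)` play the role that Excel's matrix functions (such as `MMULT`) would play in a spreadsheet version. The classes deliberately overlap so the fitted weights stay finite.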
